In our previous article, we explored the 7 pitfalls of Financial AI Chatbots. Today, let’s take a closer look at one of the most critical pitfalls: AI hallucination—and why it can have serious consequences for your investors.

AI hallucination happens when chatbots powered by large language models (LLMs) generate information that appears factual but is inaccurate, false, or entirely fabricated.

Hallucinations are not bugs; they are an inherent risk of how generative AI works. In one of OpenAI’s own recent tests (its PersonQA benchmark), the newer o3 and o4-mini models hallucinated in roughly 30% to 50% of their answers.

AI chatbots are increasingly used to analyze financial data, summarize reports, and predict market trends. However, if these chatbots produce hallucinated outputs, such as fabricated earnings figures or nonexistent news events, investors may make decisions based on false information and suffer financial losses as a result.

Financial markets are subject to strict regulations. Relying on AI-generated information that contains hallucinations can result in non-compliance with disclosure requirements or other regulatory standards. This could lead to legal penalties, fines, or sanctions from regulatory bodies.

Widespread use of similar AI models among traders can lead to herd behavior, where many market participants make similar decisions simultaneously. If these AI systems share the same flaws or biases, hallucinations can propagate rapidly, amplifying market volatility and potentially leading to systemic risks.

AI Financial Assistants offer undeniable convenience—but convenience should never come at the cost of accuracy. As a financial service provider, how can you ensure the AI solutions you rely on deliver insights that are timely, trustworthy, and free from hallucinations?

To help you make an informed decision, we've put together a detailed checklist of key questions to ask when assessing AI Financial Assistant vendors.

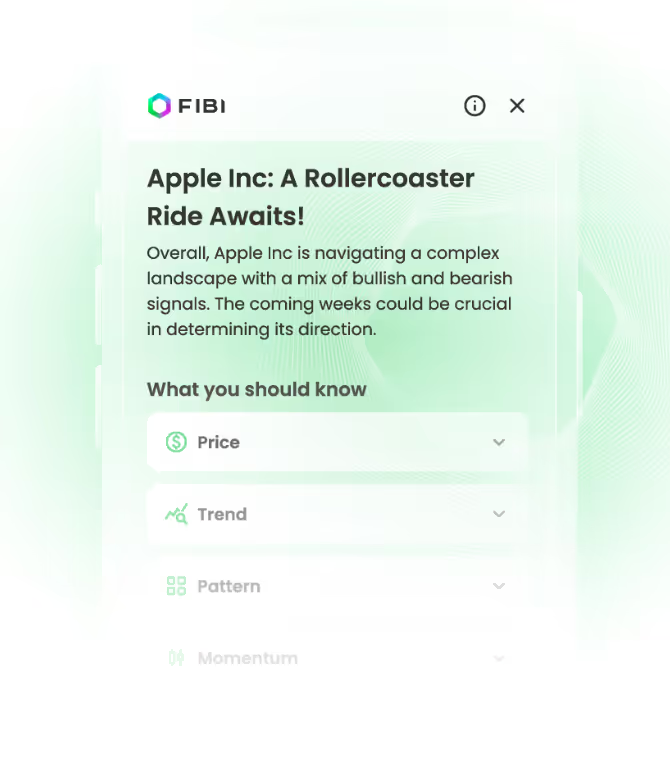

Our unique, closed-format AI Assistant, FIBI, avoids these common chatbot pitfalls. Trained by Trading Central’s award-winning financial analysts and fed real-time market data, news, and social media, FIBI provides today’s investors with financial insights that are timely, actionable, compliant, and consistent. The closed format keeps users from getting pulled into endless conversational loops and limits them to high-quality, investing-specific questions, which leads to more predictable and helpful responses.

Interested in adding FIBI to your research suite? Book a demo!